Are Today’s Virtual Assistants Dismantling Fundamental Rights to Privacy?

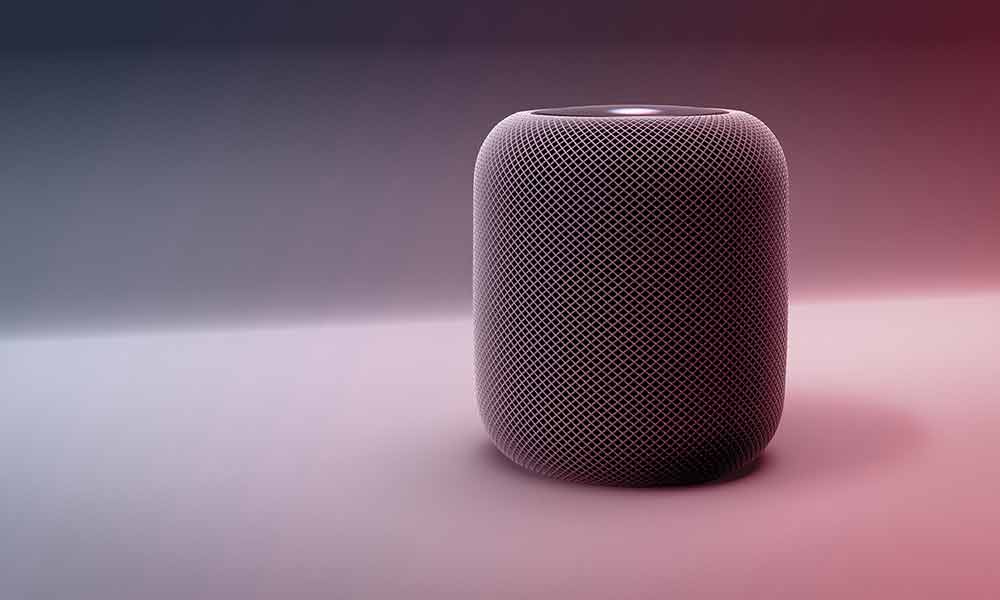

As technology continues to evolve at pace, tech that once seemed like it belonged in only the most futuristic of movies is now becoming part of our everyday lives. Voice assistants are a great example of this.

A far cry from the first virtual assistants that appeared on the scene in the early ’60s, today’s voice assistants have automated our homes and businesses, and revolutionised our shopping and searching behaviour more than perhaps anyone could have predicted.

But is this seemingly innocuous technology something that we should be inviting into our personal spaces so readily?

Innocent or Quite Naughty?

Although the many brands of voice assistants on the market are increasingly becoming a major part of people’s lives, recent news stories suggest that they might not be as innocent as we thought.

Recently, reports have come to light that some of the major tech firms have been using human contractors to listen to our voice assistant recordings without either our knowledge or permission. There’s no doubt about the benefit of voice assistants to our businesses, but how can we be sure that these helpful assistants aren’t listening to things we’d rather stayed within our own four walls?

Ask Alexa

The first of the security breaches was uncovered when Bloomberg revealed that human contractors were being used for apparent quality control of Amazon’s personal assistant, Alexa.

Trawling through audio recordings that users had thought would only be heard by an artificial intelligence rather than human ears, the whistle-blowers reported listening to upsetting and even potentially criminal conversations.

Hey Google

But, if you’ve given permission for your voice assistant to make recordings of your conversations, should you be upset if these recordings are then heard? In these cases, it all comes down to whether these recordings were made with the user’s knowledge or not.

Google was also revealed to be listening to audio clips, just like Amazon. However, it transpired that around 15% of these clips were made accidentally, inadvertently capturing sensitive information when the user had no idea that Google was listening.

Thank You Siri

Whilst it’s not unusual for tech firms to employ contractors to review their customers’ interactions with their technology in order to make improvements and refinements, the way in which the data has been collected seems to be at greatest fault here.

Apple has said that it analyses recordings, in order to improve the assistant as well as its dictation facilities, in a much more privacy-conscious way than its competitors. Even so, its practices still fail to match the expectations of its users.

The accidental triggering of the voice assistant is the biggest problem and has resulted in the greatest violations of privacy. Mishearing of homophones and ambient noise is common across all the assistants but, in the case of Siri, even the sound of a zip has been misconstrued as a ‘wake word’.

In this case, can you trust that your assistant isn’t listening and transmitting recordings without you giving it a direct command? Even with fines of up to $40,000 being possible when personal information is recorded without the assistant’s wake word being used, is this enough compensation for our privacy being violated by the technology we have invited into our homes and businesses?

Are You Listening?

Facebook Messenger, Cortana and Skype (when using the app’s translation feature) have also been reported to have captured much more personal information than their users ever realised. Even when users granted permission for recordings to be made, it’s doubtful that they suspected the audio clips would be listened to by human quality controllers.

However, although voice-activated technology can seem to raise huge privacy concerns for businesses, there are simple steps you can take to prevent your interactions with it being recorded and analysed by external companies. This is great news when it comes to taking advantage of the huge productivity wins that voice assistant automation can deliver for your business.

Let NECL Help You Secure Your Privacy

The so-called ‘grading system’ of having human contractors listen to our recorded audio files may be paused at Google and Apple for the time being, but the reality is that they will more than likely return to this practice in the future, albeit with a clearer opt-in system.

Thankfully, there are easy steps you can take yourself to prevent Google, Amazon, Apple and Microsoft from collecting your recordings. Depending on the assistant you are using, changing the settings in its Activity Control pages or Privacy Settings is something you can do yourself to protect your privacy.

If you’d like to know more, one of our experts at NECL can guide you through the steps you need to take to keep those conversations within the privacy of your office. Give us a call on 020 3664 636, or get in touch with us via our form on our contact page.